FPGA Acceleration of Binary Weighted Neural Network Inference

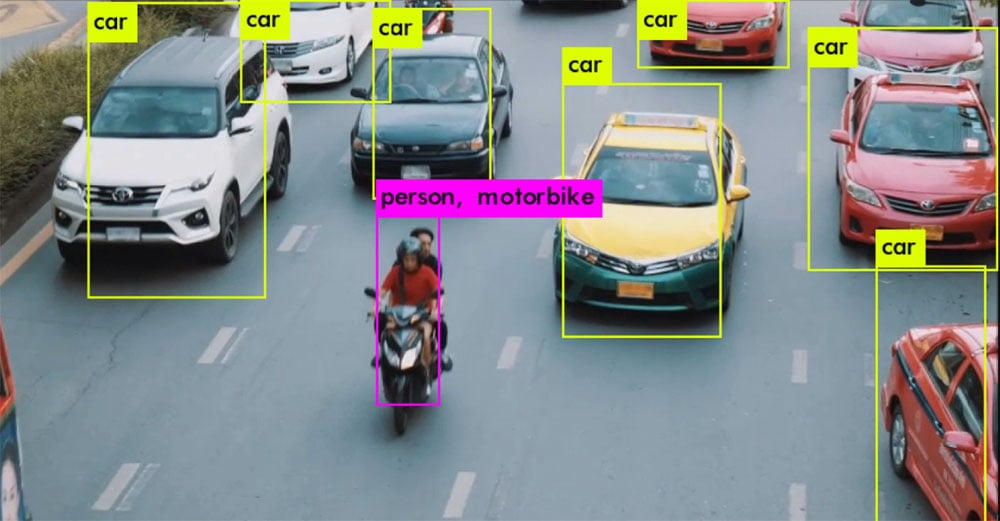

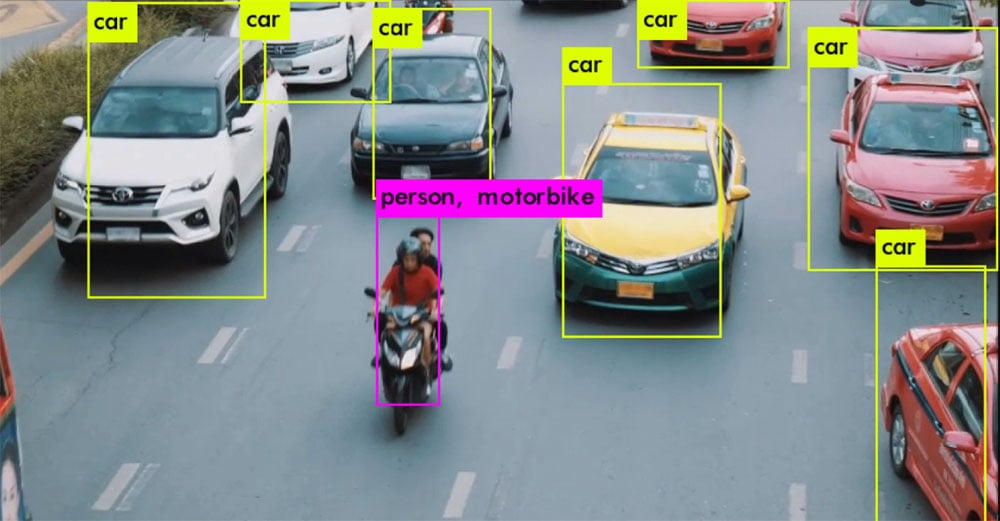

White Paper FPGA Acceleration of Binary Weighted Neural Network Inference One of the features of YOLOv3 is multiple-object recognition in a single image. We used

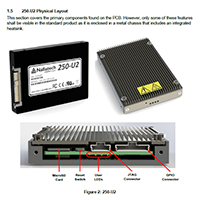

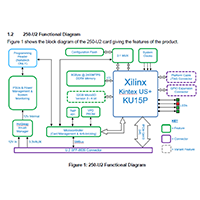

BittWare’s 250-U2 is a Computational Storage Processor conforming to the U.2 form factor. It features an AMD Kintex UltraScale+ FPGA directly coupled to local DDR4 memory. This energy-efficient, flexible compute node is intended to be deployed within conventional U.2 NVMe storage arrays (approximately 1:8 ratio) allowing FPGA-accelerated instances of:

The 250-U2 can be wholly programmed by customers developing in-house capabilities, or delivered as a ready-to-run, pre-configured solution featuring Eideticom’s NoLoad® IP. It is front-serviceable in a 1U chassis and can sit alongside storage units in the same server, letting users mix and match storage and acceleration.

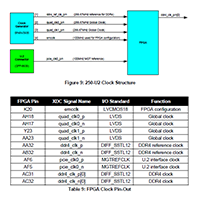

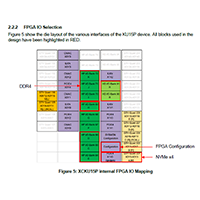

The Hardware Reference Guide (HRG) gives much more detail about the card, including block diagrams, tables, and descriptions.


Get extended warranty support and save time with a pre-integrated solution!


